Real vs. Synthetic

Usually a lot of recorded images or video sequences are used for testing in order to cover as many of relevant scenes and visual challenges as possible. This approach causes several problems:

- Even with a very large set of recorded data there is no guarantee that all scenes and challenges relevant for the target application are covered. This approach is insufficient for certification.

- It has been shown that published test data tends to be heavily biased (e.g. [Pinto e.a. 2008], [Ponce e.a. 2006], or [Torralba e.a. 2011]).

- This also makes it very likely that recorded data contain a significant amount of redundancy, e.g. many pictures that cover the same challenges with insufficient variance of visual scenes.

- Recording all these images is expensive. Additionally, several situations cannot be arranged in reality due to safety issues or by the large effort involved.

- The expected results (called “ground truth” or GT, some examples are shown below) are needed for evaluating test outputs. Usually they are generated manually, which is expensive and error prone.

Today a number of test data sets are publicly available, but mostly these are not dedicated to a certain application. They allow assessing the tested computer vision solution with respect to the target application only to a limited extent.

Is artificial test data valid for real world applications?

Generation of synthetic test data is an alternative to recording real data, because it provides full control over scenarios, configurations and visual challenges. Further benefits are no risks to humans, environment, or material when testing dangerous situations (such as head-on encounters), and that precise ground truth is available almost for free.

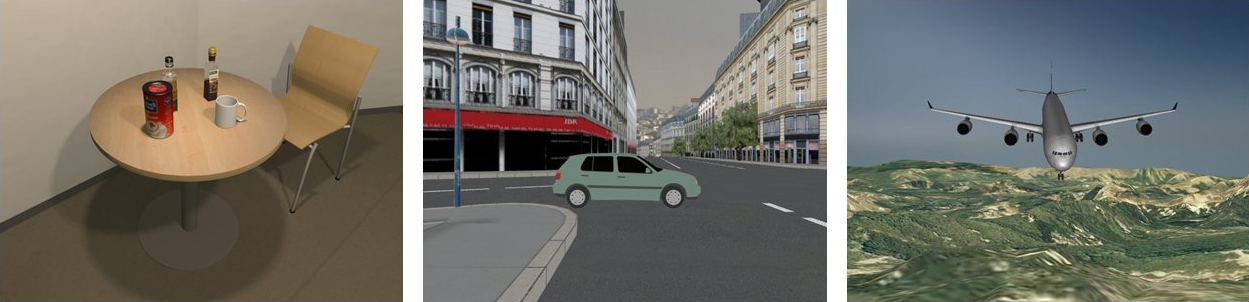

It is often argued, that synthetic data are not sufficiently realistic due to simplifications in representing lighting, shadowing, surface textures, transparency, object complexity, transparency, or weather conditions. Realistic rendering of such effects is challenging, consumes resources, and for special situations still today not fully solvable. It should be considered, however, that this applies to human perception, but with VITRO we test computer vision (CV) algorithms. The realism in synthetic data therefore has to suffice their “expectation” of reality.

Using synthetic data for testing CV algorithms is not new. For instance, the automotive industry uses it for assessing driver assistance systems such as lane or traffic sign detectors. While older systems exhibited the mentioned simplifications due to computing limitations in real-time (in-the-loop) test conditions, resulting in test stimuli (pictures) that are easier to process than real pictures because of higher contrast, lack of confusing details and disturbances such as optical noise, newer solutions provide sufficient realism, in particular if no real-time requirements have to be fulfilled.